Campus-wide Access to Microsoft Copilot

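We are pleased to announce Microsoft Copilot with Data Protection, a new service supported by Lamar University's Center for Teaching and Learning Enhancement (CTLE) and Information Technology. Microsoft Copilot with Data Protection, a generative AI platform designed and built specifically for organizations, is now available for Lamar University faculty, staff, and students.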

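Previously branded Bing Chat Enterprise (BCE), Copilot with Data Protection ensures that organizational data is protected from threats. In Copilot with Data Protection, user and organizational data is protected: chat data is not saved, and neither Microsoft nor other large language models can access it in any way to train their AI tools. This layer of protection is what distinguishes Copilot with Data Protection from the consumer version of Copilot.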

In addition, Copilot with Data Protection backs its generated content with verifiable citations, is designed to help organizations research industry insights and analyze data, and can provide visual answers including graphs and charts. Although it is built on the same tools and data as ChatGPT, Copilot with Data Protection has access to current Internet data, whereas the free version of ChatGPT (GPT-3.5) only includes data through 2021.*

*Note that while this tool is available to you immediately, policies governing its use and related data may be published soon.

Getting Started

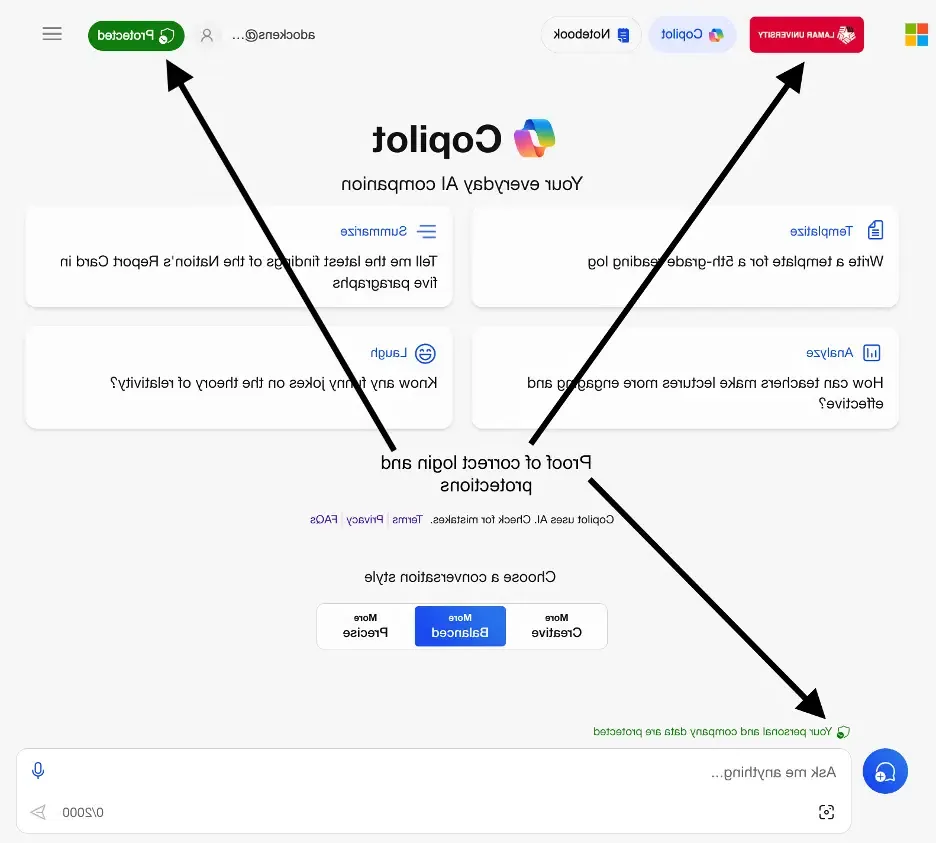

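Navigate to copilot.microsoft.com and log in using your LEA ID and password. When you are signed in, a message above the chat input box confirming "Your personal and company data are protected in this chat" and a green "Protected" notice in the upper-right corner indicate that you are using Copilot with Data Protection. If you have signed in correctly, you should also see the Lamar University logo and name in the upper-left corner. Copilot with Data Protection is currently available in Edge (desktop and mobile) and Chrome (desktop), with support for other browsers coming soon. It is not currently supported in the Bing mobile apps for iOS and Android.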

Example of Copilot with Data Protection when signed in with an LEA ID and password.

Tips for using Copilot with Data Protection

- Be cautious. Without appropriate approvals, Lamar University allows only publicly available information to be entered into generative AI tools, including Copilot.

- Log in. Always make sure you are signed in with your LEA account when using Copilot so that your data is protected.

- Potential uses. Content generation, course development assistance, brainstorming, data analysis, document summarization, learning new skills, writing code, and more. Faculty should visit CTLE for ideas.

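- Judicious use. Be careful about the information you enter in prompts when using Copilot with Data Protection. Copilot with Data Protection is intended for public information only; information at any other level of data sensitivity should not be entered. As with other services, we do not recommend including your own personal information in prompts. To maintain privacy, you may not enter personal information about coworkers, students, or others. Failure to follow this guidance may result in violations of law (e.g., FERPA, HIPAA). Similarly, when using the service, you must comply with copyright and intellectual property protections. See the additional considerations below for protecting privacy when using generative AI tools. Note that you must abide by all existing privacy, technology, and data policies, as well as the existing acceptable use policies of Lamar University and The Texas State University System.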

Protecting Privacy in Copilot and other Generative AI Tools

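Generative AI, which encompasses artificial intelligence models that create content in various forms such as text, images, and audio, uses deep learning algorithms and training data to generate new content that approximates its training data. In light of its growing popularity and transformative nature, the following general guidance is provided for Lamar University, with a focus on data privacy. Please note that this guidance is not legal advice and is not exhaustive.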

If you use generative AI in regular work

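- Explore options for purchasing or licensing a commercial or enterprise version of the software. Enterprise software typically comes with contractual protections and additional resources, such as live support.

- Begin discussing the privacy considerations listed in the next section with your colleagues.

- Consider where and how existing policies and best practices could be updated to better protect user privacy.

- Remember to validate the output of generative AI, and if you use generative AI in a workflow, consider adding formal fact-checking, editorial, and validation steps to that workflow.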

If you create or develop generative AI

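- Be transparent about how generative AI models are trained. Inform users about what data may be collected about them when they use generative AI, and create accessible mechanisms for users to request deletion of their data or to opt out of certain data processing activities.

- Explore incorporating privacy-enhancing technologies in your initial design phase to mitigate privacy risks and protect user data. Consider technologies that support data de-identification and anonymization, PII identification, and data loss prevention, and always incorporate principles of data minimization.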
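As one illustration of the PII identification idea above, the following Python sketch redacts a few common PII patterns from text before that text is passed to a generative AI tool. It is a minimal sketch under stated assumptions. The three regular expressions (email addresses, U.S. Social Security numbers written as 123-45-6789, and North American phone numbers) and the function name `redact_pii` are hypothetical choices made for this example. They are not part of any Lamar University or Microsoft tool. Pattern matching like this catches only well-formatted identifiers and is no substitute for a dedicated data loss prevention product. It also does not change the rule that only publicly available information may be entered into Copilot.

```python
import re

# Hypothetical, illustrative patterns only. Real PII detection and data loss
# prevention tools cover far more formats (names, addresses, student IDs, etc.).
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\(?\b\d{3}\)?[-. ]?\d{3}[-. ]\d{4}\b"),
}


def redact_pii(text: str) -> tuple[str, dict[str, int]]:
    """Replace each pattern match with a [LABEL] placeholder.

    Patterns are applied in dictionary order (EMAIL, SSN, PHONE).
    Returns the redacted text and the number of replacements per label,
    so a reviewer can see what was removed before a prompt is sent.
    """
    counts: dict[str, int] = {}
    for label, pattern in PII_PATTERNS.items():
        # subn returns (new_text, number_of_substitutions)
        text, n = pattern.subn(f"[{label}]", text)
        counts[label] = n
    return text, counts
```

For example, `redact_pii("Contact jdoe@example.edu or 409-555-0100; SSN 123-45-6789.")` returns the text `"Contact [EMAIL] or [PHONE]; SSN [SSN]."` with one match counted for each label. Returning the counts with the text lets a reviewer confirm what was removed, which fits the data-minimization principle of sending no more information than a task requires.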

If you would like assistance as you consider data minimization, data anonymization, or data de-identification in your AI work, the IT Team can help. Contact servicedesk@congtygulegend.net.

Supplementary Guidance

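The realm of generative AI is not novel, and concerns about its applications and potential impacts have been deliberated and will continue to be deliberated over time. Despite the recent surge in AI's popularity and the widespread adoption of generative AI capabilities, it is essential to recognize that established policies and practices, as well as scholarly, historical, and theoretical frameworks, should be considered alongside contemporary discussions. University employees must be careful to adhere to all relevant laws, university policies, and contractual obligations.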

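In the university context, specific privacy laws such as the U.S. Privacy Act, state privacy laws like PIPA, and industry-specific regulations including FERPA, HIPAA, and COPPA, as well as global laws like GDPR and PIPL, are pertinent considerations. Given the unprecedented proliferation of AI and generative AI capabilities, market dynamics are driving intense competition to integrate AI into existing products. This competitive pressure can compromise ethical standards and integrity when new features and functionality are rushed to market. Do your due diligence.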

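It must be acknowledged that training data may contain information collected in violation of copyright and privacy laws, potentially tainting the model and any products that use it. The social and commercial consequences of such violations may become apparent only over a longer period of time. We will continue to monitor these concerns.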

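Efforts to identify and remove personally identifiable information (PII) from large language models are relatively untested, which may complicate responding to data subject requests within required time frames. Additionally, the presence of PII in large language models can cause generative AI to expose that information in its output. The use of input data as training data, combined with the interactive and conversational nature of data collection, may lead users to inadvertently share more information than they intend.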

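Users may lack the technical literacy to recognize that generative AI mimics human behavior, and they may be deliberately misled into believing they are interacting with a human. Prolonged conversational interactions may cause users to lower their guard and inadvertently divulge personal information. The extent of personal information, user behavior, and analytics that is recorded, retained, or shared with third parties remains unclear. As generative AI becomes more mainstream, it is likely to follow established channels for monetization, potentially using personal data for targeted advertising. Clear policies could be developed for the retention and deletion of user data collected during interactions with generative AI systems. When considering which tools to use, it is essential to evaluate whether individuals can request deletion of their personal data, in line with GDPR and most other privacy laws.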

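Depending on their application, generative AI models may qualify as automated decision-making, thereby incurring heightened privacy and consent obligations. Under the GDPR, individuals have the right not to be subject to a decision based solely on automated processing that produces legal or similarly significant effects. Some state privacy laws give individuals the right to opt out of profiling based on their personal data.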

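Given the extended and conversational nature of many chatbot-based generative AI solutions, special care should be taken to minimize legal and privacy risks related to eavesdropping. Risks may arise under federal and state wiretap laws, and configuring generative AI solutions appropriately, with input from university legal counsel, may be necessary to mitigate these risks.

Generative AI models may also be vulnerable to adversarial prompt engineering, in which malicious actors manipulate inputs to produce harmful or misleading content. Such manipulation can lead to the spread of misinformation, exposure of sensitive data, or inappropriate collection of private information. It is important to critically evaluate the output of all generative AI tools.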

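Implementations of generative AI should prioritize transparency for users, supported by training and education programs. Educating users about how AI models function, the data they collect, and their potential risks enables individuals to make informed decisions and take privacy precautions when using these technologies. Promoting AI literacy within the university community is essential to understanding the privacy implications of interacting with generative AI systems. Lamar University is offering training through CTLE and other available offices.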

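Generative AI systems are capable of generating content that may unintentionally or intentionally defame individuals or organizations. Implementing vigilant measures to prevent the generation of defamatory content, such as robust content moderation, human review and editing, and filtering mechanisms, is essential. Clear policies could be developed to address and remediate defamation incidents arising from the use of generative AI systems, ensuring accountability and protecting the reputation of the university and the community.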

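Generative AI systems can also produce false, misleading, or inaccurate content. Users should be aware that generative AI output may be inaccurate or untrue, because these models do not evaluate outputs for factual accuracy. Instead, they evaluate outputs based on similarity to their training data. All generative AI output should be rigorously evaluated before use.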

Resources

To learn more or get help with Copilot with Data Protection, contact the Technology Services Help Desk, the Center for Teaching and Learning Enhancement, or review the Microsoft Copilot with Data Protection resources.

Special note regarding privacy, security, or misuse of AI

If a faculty member fails to adhere to privacy, security, and AI guidelines before Lamar University establishes more formal AI policies, potential university responses may include the following:

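Warning and Education: The university may issue a warning to the faculty member, emphasizing the importance of following the guidelines and providing additional education on the proper use of generative AI tools, especially concerning data protection and privacy considerations.

Review and Assessment: The university may review the specific circumstances of the guideline violation. This may involve assessing the nature and extent of the violation, as well as its potential impact on data privacy and other relevant policies.

Temporary Access Restriction: While the review is in progress, the university may temporarily restrict the faculty member's access to generative AI tools or related resources. This measure is intended to prevent further violations and protect the university community.

Policy Development Involvement: Faculty members may be encouraged to participate actively in developing formal policies on the use of generative AI. This involvement could include providing feedback, attending workshops, or participating in discussions that shape the university's approach to these technologies.

Collaboration with Privacy Team: If the violation involves privacy issues, the faculty member may be asked to work with the university's privacy team to address the issue, implement corrective measures, and ensure compliance with relevant privacy laws.

Professional Development Opportunities: The university may offer professional development opportunities, such as training sessions or workshops, to improve faculty understanding of ethical considerations, privacy, and the responsible use of generative AI tools.

Escalation to Higher Authorities: If the violation is severe or persistent, the university may escalate the matter to higher authorities within the institution for further investigation and possible disciplinary action.

Policy Enforcement: Once formal policies are established, any subsequent violations may be subject to the university's official disciplinary procedures, which could include loss of privileged access, warnings, probation, suspension, termination, or other appropriate measures, including civil action or criminal prosecution.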

Copilot image attribution for editorial use: Adriavidal - stock.adobe.com